Sign Language Synthesis: Current Signing Avatar Systems and Representation

Jan 1, 2024

Víctor Ubieto

Jaume Pozo

Eva Valls

Beatriz Cabrero-Daniel

Josep Blat

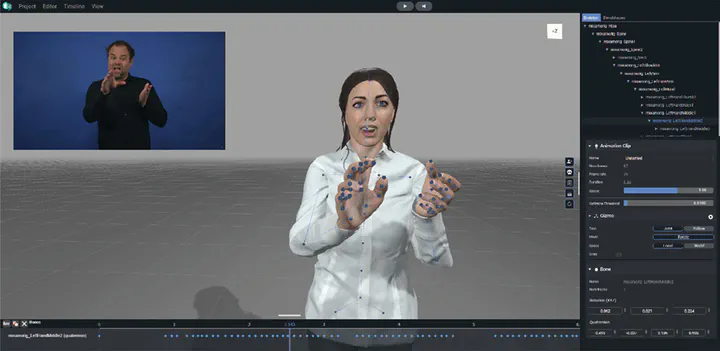

Animics’ keyframe animation window view.

Abstract

Animating avatars for sign languages (SLs) is a challenging task due to the high demands on quality and naturalness. Recent publications and media show that the quality of virtual avatars is evolving, yet avatars remain heavily constrained by the predefined set of available animations, which must be crafted by skilled animators. In this research, we propose two different but complementary systems, focusing on sign animation synthesis and scalability. The first uses Behaviour Markup Language (BML) to drive the avatar procedurally from textual instructions derived from HamNoSys, SiGML and the Facial Action Coding System (FACS). Several techniques are employed to drive the avatar, such as geometric inverse kinematics and blendshape weight modulation. The second system relies on widespread low-end technology, such as webcams, to generate 3D animations from simple video by applying various Machine Learning (ML) techniques. In our implementation, both systems are coupled with an editor for tweaking the resulting 3D animations. The editor also provides a point-and-click interface for generating BML instructions through sliders and boxes whose timing and duration can be freely adjusted. Hence, users can generate new content adapted to their circumstances without the need for specialised equipment or knowledge.
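To make "blendshape weight modulation" driven by timed textual instructions more concrete, the following is a minimal sketch, not the authors' implementation. It assumes a simplified BML-style facial instruction that uses the BML 1.0 `faceLexeme` sync points (start, attackPeak, relax, end). The piecewise-linear envelope, the additive clamping of overlapping instructions, and the names `FaceLexeme`, `blend` and `"AU12"` (FACS Action Unit 12, lip corner puller, used here as a hypothetical blendshape name) are all illustrative assumptions. Each vertex is deformed as the neutral position plus the weighted sum of blendshape deltas.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class FaceLexeme:
    """Hypothetical, simplified BML-style facial instruction.

    Timing mirrors the BML faceLexeme sync points. The linear ramp-up,
    hold and ramp-down between them is an assumption of this sketch.
    """
    blendshape: str     # target blendshape name (hypothetical, e.g. "AU12")
    amount: float       # peak weight, assumed to lie in [0, 1]
    start: float        # seconds
    attack_peak: float
    relax: float
    end: float

    def weight(self, t: float) -> float:
        """Piecewise-linear weight envelope evaluated at time t (seconds)."""
        if t <= self.start or t >= self.end:
            return 0.0
        if t < self.attack_peak:
            # Ramp up from 0 at `start` to `amount` at `attack_peak`.
            return self.amount * (t - self.start) / (self.attack_peak - self.start)
        if t <= self.relax:
            # Hold the peak weight until `relax`.
            return self.amount
        # Fade out from `amount` at `relax` to 0 at `end`.
        return self.amount * (self.end - t) / (self.end - self.relax)


def blend(base: np.ndarray,
          deltas: dict[str, np.ndarray],
          lexemes: list[FaceLexeme],
          t: float) -> tuple[np.ndarray, dict[str, float]]:
    """Deform the neutral mesh: v(t) = base + sum_i w_i(t) * delta_i.

    Every lexeme must name a blendshape present in `deltas`.
    """
    weights = {name: 0.0 for name in deltas}
    for lex in lexemes:
        # Overlapping instructions on one blendshape are summed, then clamped to 1.
        weights[lex.blendshape] = min(1.0, weights[lex.blendshape] + lex.weight(t))
    result = base.astype(float)  # astype returns a copy, so `base` is untouched
    for name, w in weights.items():
        result += w * deltas[name]
    return result, weights


if __name__ == "__main__":
    base = np.zeros((2, 3))  # two vertices in their neutral positions
    deltas = {"AU12": np.array([[0.0, 1.0, 0.0], [0.0, 0.5, 0.0]])}
    smile = FaceLexeme("AU12", amount=0.8, start=0.0,
                       attack_peak=0.2, relax=0.8, end=1.0)
    for t in (0.1, 0.5, 0.9):
        verts, w = blend(base, deltas, [smile], t)
        print(t, round(w["AU12"], 3), verts[0])
```

Evaluating the envelope per frame and recomputing the weighted sum keeps the timing (where an editor's sliders and boxes would act) separate from the geometry; a production system would likely use smoother easing curves than the linear ramps assumed here.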

Publication

Sign Language Machine Translation